
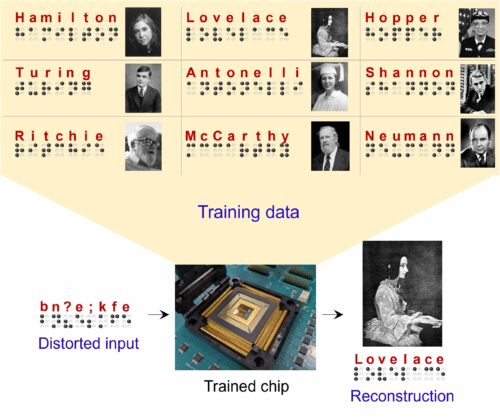

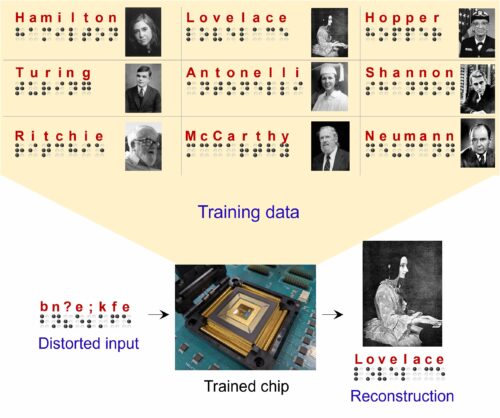

Researchers have developed a deep learning method using memristor crossbars that is designed to deliver high-precision computations despite hardware errors and noise.

Machine learning models have proven to be valuable tools for making predictions and solving real-world data analysis tasks. They only perform well after extensive training, however, which costs time and energy. Researchers at Texas A&M University, Rain Neuromorphics and Sandia National Laboratories recently developed a new system for training deep learning models more efficiently and at a larger scale.

This approach could reduce the carbon footprint and financial cost of training AI models, making their large-scale implementation easier and more sustainable. To achieve this, the researchers had to overcome two key limitations of current AI training practices. The first is the reliance on hardware based on graphics processing units (GPUs), which were not originally designed to run and train deep learning models. The second is the reliance on inefficient, math-heavy software tools, in particular the backpropagation algorithm.

Training a deep neural network requires continuously adapting its configuration, the so-called “weights,” so that it recognizes patterns in the data with increasing accuracy. This adaptation requires many multiplications, which conventional digital processors struggle to perform efficiently because they must fetch each weight from a separate memory unit.
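To make the cost described above concrete, here is a minimal sketch of a single dense layer that counts its multiply-accumulate operations and weight fetches. The function name `dense_forward` and the counters are illustrative assumptions, not anything from the researchers' work, and the model of one memory read per multiplication is a deliberate simplification of how a real processor behaves.

```python
def dense_forward(weights, inputs):
    """Compute outputs[j] = sum_i inputs[i] * weights[i][j], counting the work.

    weights is a list of rows (one row per input), inputs is a list of floats.
    Both counters are hypothetical bookkeeping for illustration only.
    """
    n_in, n_out = len(weights), len(weights[0])
    outputs = [0.0] * n_out
    macs = 0          # multiply-accumulate operations performed
    weight_reads = 0  # weight values fetched from a (notionally separate) memory
    for j in range(n_out):
        for i in range(n_in):
            w = weights[i][j]  # simplified model: every use is one memory read
            weight_reads += 1
            outputs[j] += inputs[i] * w
            macs += 1
    return outputs, macs, weight_reads


weights = [[0.5, -1.0], [0.25, 2.0], [1.0, 0.0]]  # 3 inputs, 2 outputs
outputs, macs, weight_reads = dense_forward(weights, [1.0, 2.0, 3.0])
print(outputs, macs, weight_reads)  # [4.0, 3.0] 6 6
```

Even this tiny layer needs one weight fetch per multiplication. In a network with millions of weights, that traffic between memory and processor is repeated for every training example.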

As a hardware solution, analog memristor crossbars can store the synaptic weights in the same place where the computation takes place, reducing data movement. However, the traditional backpropagation algorithm, which suits high-precision digital hardware, is not compatible with memristor crossbars because of their hardware noise, errors and limited precision.
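The in-memory computation described above can be sketched as an idealized crossbar model: each weight is stored as a conductance at a row/column crossing, input voltages drive the rows, and each column's current is the sum of voltage times conductance (Ohm's law per device, Kirchhoff's current law per column). The function `crossbar_mvm`, the Gaussian noise model and the numeric values are assumptions made for illustration, not a description of the researchers' hardware.

```python
import random


def crossbar_mvm(conductances, voltages, noise_std=0.0, rng=None):
    """Column currents of an idealized crossbar: I[j] = sum_i V[i] * G[i][j].

    noise_std adds independent Gaussian noise to each device's conductance
    on every read, a simplified stand-in for analog hardware imperfections.
    """
    rng = rng or random.Random(0)
    n_rows, n_cols = len(conductances), len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            g = conductances[i][j] + rng.gauss(0.0, noise_std)
            currents[j] += voltages[i] * g
    return currents


G = [[0.5, 1.0], [0.25, 2.0], [1.0, 0.0]]  # conductances: 3 rows, 2 columns
V = [1.0, 2.0, 3.0]                        # row voltages (the layer inputs)

ideal = crossbar_mvm(G, V)                                     # noise-free read
noisy = crossbar_mvm(G, V, noise_std=0.05, rng=random.Random(42))
print(ideal)  # [4.0, 5.0]
print(noisy)  # close to the ideal values, but perturbed
```

The weights never leave the array, so no separate fetch is needed. The trade-off is that every read is slightly perturbed, which is what makes an algorithm that expects exact arithmetic a poor fit.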

The researchers developed a new co-optimized learning algorithm that takes advantage of the hardware parallelism of memristor crossbars. This algorithm, inspired by the differences in neuronal activity observed in neuroscience studies, tolerates errors and mimics the brain’s ability to learn even from sparse, poorly defined and “noisy” information.
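The article does not give the details of the researchers' algorithm, so the following is only a generic sketch of the broader idea of learning from a difference between two activity states. A single linear layer is read once freely and once with its output nudged toward the target, and each weight is updated from the local product of its input and the difference between the two readouts. All names and parameters (`train_activity_difference`, `beta`, `noise_std` and the rest) are hypothetical, and for one layer this rule reduces to the classic delta rule.

```python
import random


def noisy_forward(weights, x, noise_std, rng):
    """Linear layer readout with Gaussian noise added to each output."""
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + rng.gauss(0.0, noise_std)
        for j in range(len(weights[0]))
    ]


def train_activity_difference(samples, n_in, n_out, lr=0.05, beta=0.5,
                              noise_std=0.02, epochs=200, seed=0):
    """Train one linear layer from differences between two activity states."""
    rng = random.Random(seed)
    weights = [[0.0] * n_out for _ in range(n_in)]
    for _ in range(epochs):
        for x, target in samples:
            # Free state: the (noisy) output the layer produces on its own.
            free = noisy_forward(weights, x, noise_std, rng)
            # Nudged state: the same output pulled a fraction beta toward the target.
            nudged = [f + beta * (t - f) for f, t in zip(free, target)]
            # Local update: input activity times the activity difference.
            for i in range(n_in):
                for j in range(n_out):
                    weights[i][j] += lr * x[i] * (nudged[j] - free[j]) / beta
    return weights


# Toy data generated from the hidden weights [[1.0], [-2.0]].
samples = [([1.0, 0.0], [1.0]), ([0.0, 1.0], [-2.0]), ([1.0, 1.0], [-1.0])]
learned = train_activity_difference(samples, n_in=2, n_out=1)
print(learned)  # approximately [[1.0], [-2.0]] despite the noisy readouts
```

Because each update depends only on measured activity rather than on exact gradients propagated through the network, noise in the readouts averages out over many small steps instead of accumulating.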

The brain-inspired, analog-hardware-compatible algorithm was developed to enable energy-efficient AI on edge devices with small batteries, removing the need for large cloud servers that consume large amounts of electricity. This could ultimately help make large-scale training of deep learning algorithms more affordable and sustainable.
