
Researchers at Stanford University have developed a smart skin that can recognize objects just by touching them.

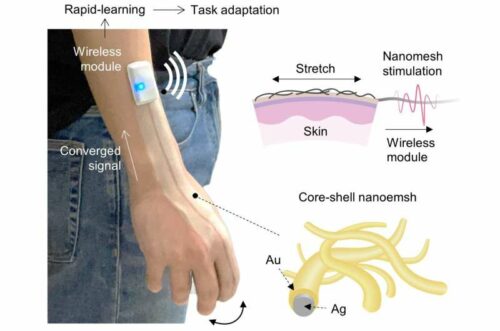

The system is sprayed onto the skin and consists of a printed, biocompatible nanomesh connected directly to a wireless Bluetooth module and trained through meta-learning. It can recognize objects by touch alone, or let users communicate through hand gestures with apps in an immersive environment.

The device is a sprayable, electrically sensitive mesh network embedded in polyurethane. The mesh is composed of millions of gold-coated silver nanowires that touch one another to form dynamic electrical pathways. It is electrically active, biocompatible, and breathable, and it stays in place unless washed off with soap and water. It conforms closely to the wrinkles and folds of each finger of the wearer. A lightweight Bluetooth module attached to the mesh then transmits the signal changes wirelessly.

Because it is sprayed on, the device needs no substrate. This design choice eliminates unwanted motion artifacts and allows the researchers to use a single trace of conductive mesh to capture multi-joint information from the fingers.

The device relies on a machine learning algorithm. As the fingers bend and twist, the nanowires in the mesh are pressed together or pulled apart, which changes the mesh's electrical conductivity. A computer monitors these changing conductivity patterns and maps them to specific physical tasks and gestures. Type an X on a keyboard, for example, and the algorithm learns to recognize that task from the pattern of conductivity changes. Once the algorithm is suitably trained, the physical keyboard itself may no longer be necessary. The same principle can be used to recognize sign language, or even to recognize objects by tracing their exterior surfaces.
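To make the idea of mapping conductivity patterns to tasks concrete, here is a minimal sketch in Python. It is not the Stanford team's method (the paper uses meta-learning); it swaps in a simple nearest-centroid classifier, and the task names, conductivity profiles, and noise level are all invented for illustration.

```python
import math
import random

def make_trace(task_profile, noise=0.05, rng=random):
    """Simulate one window of conductivity readings (synthetic, not real sensor data)."""
    return [value + rng.gauss(0.0, noise) for value in task_profile]

def centroid(traces):
    """Average several traces sample by sample to get one reference pattern."""
    count = len(traces)
    return [sum(column) / count for column in zip(*traces)]

def classify(trace, centroids):
    """Return the label whose reference pattern is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(trace, centroids[label]))

rng = random.Random(0)

# Hypothetical normalized conductivity profiles for three hand tasks (assumed values).
PROFILES = {
    "type_x": [0.2, 0.8, 0.3, 0.1],
    "fist": [0.9, 0.9, 0.8, 0.9],
    "point": [0.1, 0.1, 0.9, 0.2],
}

# "Training": average five noisy example traces per task.
centroids = {
    label: centroid([make_trace(profile, rng=rng) for _ in range(5)])
    for label, profile in PROFILES.items()
}

# "Inference": classify a fresh noisy trace of a known task.
prediction = classify(make_trace(PROFILES["fist"], rng=rng), centroids)
print(prediction)
```

A real system would work on continuous signal streams and far richer patterns, but the loop is the same: collect labeled conductivity traces, learn a representation of each task, then match new traces against it.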

Processing such signals normally requires large amounts of data and computation. To address this, the Stanford team developed a more computationally efficient learning method. The technology has a wide range of potential applications: for example, it could enable new approaches to computer animation or lead to avatar-led virtual meetings with more realistic facial expressions and hand movements.

Reference: Kyun Kyu Kim et al., "A substrate-less nanomesh receptor with meta-learning for rapid hand task recognition," Nature Electronics (2022). DOI: 10.1038/s41928-022-00888-7
